Archive for category Coding tricks

A tale of hidden symbols, weakness and gold

Posted by Alessandro Pignotti in Coding tricks on March 8, 2013

A few days ago I was profiling the startup time of the clang compiler. The callgrind report highlighted that a relatively high amount of time was being spent in the _dl_lookop_symbol_x. A quick gdb inspection of the call stack quickly pointed out that the dynamic linker was spending the time doing dynamic relocations. Tons of them.

Using objdump -R clang I found out that a whopping 42% of them actually contained the word “clang” in the mangled symbol names. Demangling the names made it clear that they were mostly definitions of the C++ virtual tables for clang internal classes.

Basically 42% of the relocations happening at run time were actually looking for symbols which are obviously defined inside the clang executable itself. So what’s happening here?

Turns out the problem is a poor interaction between the traditional linker (ld, from the binutils package) and C++‘s ODR rule.

ODR stands for One Definition Rule and says that any entity in C++ code must be defined only once. This is obviously trivial for methods, since if they are defined twice, even in different translation units, there will be an error at link time. There are, though, a few things that are implicity defined by the compiler, for example the virtual tables, which are defined whenever a class using virtual methods is declared. (Here I’m speaking informally, I suspect the terminology is not 100% correct) Since the vtable is potentially defined in more than a translation unit, the symbols for the vtable are flagged as weak. Weak symbols will not conflict with each other and they will be all discarded but one (any one is fine). The surviving one will be used by the end product of the linker.

Unfortunately, the compiler does not know if the compiled object file will be used as part of a dynamic library or as part of a main executable. This means that it has to treat the vtable symbols like any library method, since (if the target is a dynamic library) potentially such symbols may be overridden by another definition in a library or in the main executable. This has to happen since the ODR rule must also apply across the library borders for proper support of a few things, especially exception handling.

So at compile time there is no way around using dynamic relocation on the vtable symbols. A possible workaroud would be to compile all the code with the -fvisibility=hidden flag. Unfortunately this is actually wrong since it may break the ODR rule!

At link time the linker has the chance of eliminating the dynamic relocation, since it does know if the target is a main executable and symbols defined in an executable cannot be overridden by anything (not even by LD_PRELOADed libraries). Unfortunately the traditional ld linker does not apply this optimization.

The new gold linker, originally developed at Google, does tough! It is able to completely eradicate the dynamic relocations to internally defined symbols, effectively reducing the load time.

Moral of the tale: use the gold linker. It should work in most cases (I think kernel code is a notable exception) and generate faster executables while consuming less memory and cpu time during linking.

And please, dear debian/ubuntu maintainers, link clang using gold.

The quest for graphics performance: part II

Posted by Alessandro Pignotti in Coding tricks, Insane Projects on March 16, 2010

I’d like to talk a bit about the architecture I’ve using to efficiently render the video stream in Lightspark. As often happens the key in high performance computing is using the right tools for each job. First of all video decoding and rendering are asynchronous and executed by different threads.

Decoding itself it’s done by the widely known FFMpeg, no special tricks are played here. So the starting condition of the optimized fast path is a decoded frame data structure. The data structure is short lived and it is overwritten by the next decoded frame, so it must be copied to a more stable buffer. The decoding thread maintains a short array of decoded frames ready to be rendered, to account for variance in the decoding delay. The decoded frame is in YUV420 format, this means that resolution of color data is one half of the resolution of the luminance channel. The data is returned by FFmpeg as 3 distinct buffers, one for each of the YUV channels, so we actually save 3 buffers per frame. This copy is necessary and it’s the only one that will be done on the data.

Rendering is done using a textured quad and texture data is loaded using OpenGL Pixel buffer objects (PBOs). PBOs are memory buffers managed by the GL and it’s possible to load texture data from them. Unfortunately they must be explicitly mapped to the client address space to be accessed, and unmapped when the updated. The advantage is that data transfer between PBOs and video or texture memory will be done by the GL using asynchronous DMA transfers. Using 2 PBOs it’s possible to guarantee a continuous stream of data to video memory: while one PBOs is being copied to texture memory by DMA, new data is been computed and transferred to the other using the CPU. This usage pattern is called streaming textures.

In this case such data is the next frame, taken from the decoded frames buffer. Textures data for OpenGL must be provided in planar form. So we must pack a 1-buffer-per-channel frame in a single buffer. This can be done in a zero-copy fashion using instruction provided by the SSE2 extension. Data is loaded in 128 bit chunks from each of the Y, U and V channels, then using register only operations it is correctly packed and padded. Results are written back using non-temporal moves. This means that the processor may feel free to postpone the actual commitment of data to memory, for example to exploit burst transfers on the bus. If we ever want to be sure that the changes has been committed in memory we have to call the sfence instruction. For more information see the Intel reference manuals on movapd, movntpd, sfence, punpcklb.

The result is a single buffer with the format YUV0, padding has been added to increase texture transfer efficiency, as the video cards internally works with 32-bit data anyway. The destination buffer is one of the PBOs so, at the end of the conversion routine, data will be transferred to video memory using DMA.

Using the streaming texture technique and SSE2 data packing we managed to efficiently move the frame data to texture memory, but it’s still in YUV format. Conversion to the RGB color space is basically a linear algebra operation, so it’s ideal to offload this computation to a pixel shader.

Allocating aligned memory

Posted by Alessandro Pignotti in Coding tricks on October 29, 2009

Just a quick note that may be useful to someone else. As you may know SSE2 introduced a new instruction: MOVDQA (MOVe Double Quadword Aligned). This is used to move 128 bit (16 bytes) of data from/to memory/xmm registers. This instruction only works if the data is aligned the the 16 byte boundary. There is also another instruction for the unaligned case, but the aligned version is way faster. So let’s summarize some techniques to get an aligned memory area

- For local, static and member variables you can append __attribute__ (( aligned (16 ) ) to the type definition. Example:

- For dynamically allocated memory the usual malloc is not enough, but there is a posix_memalign which has the semantics that we need. It is defined as:

struct A { int val; } __attribute__ ((aligned ( 16 ) );

int posix_memalign(void **memptr, size_t alignment, size_t size);

So we have to pass a pointer to the pointer that will receive the newly allocated memory, the required alignment (which has to be a power of two) and the allocation size. Memory allocated this way can (at least on the glibc implementation) be freed using the usual free function.

The quest for graphics performance: part I

Posted by Alessandro Pignotti in Coding tricks on June 27, 2009

Developing and optimizing Lightspark, the modern Flash player, I’m greatly expanding my knowledge and understanding of GPU internals. In the last few days I’ve managed to find out a couple of nice tricks that boosted up performance and, as nice side effects, saved CPU time and added features ![]()

First of all, I’d like to introduce a bit of the Lightspark graphics architecture

The project is designed from the ground up to make use of the features offered by modern graphics hardware. Namely 3D acceleration and programmable shaders. The Flash file format encodes the geometries to be drawn as set of edges. This representation is quite different from the one understood by GPUs. So the geometries are first triangulated (reduced to a set of triangles). This operation is done on the CPU and is quite computationally intensive, but the results are cached, so overall this does not hit performance.

Moreover Flash offer several different fill styles that should be applied on geometry, for example solid color and various kind of gradients. Lightspark handles all those possibilities using a single fragment shader, a little piece of code that is invoked on every pixel to compute the desired color. Of course the shader has to know about the current fill style. This information along with several other parameters could be passed with different methods. More on this on the next issue.

There is one peculiar thing about the shader though, let’s look at a simple pseudo code:

gl_FragColor=solid_color()*selector[0]+linear_gradient()*selector[1]+circular_gradient()*selector[2]...;

Selector is a binary array, the only allowed values are zero or one. Moreover only one value is one. This means that the current fragment (pixel) color is computed for every possible fill style and only afterward the correct result is selected. This may look like a waste of computing power, but it is actually more efficient than something like this:

if(selector[0])

gl_FragColor=solid_color();

else if(selector[1])

gl_FragColot=linear_gradient();

...

This counter intuitive fact comes from the nature of the graphics hardware. GPUs are very different from CPUs and are capable of cruching tons of vectorial operations blindingly fast. But they totally fall down on their knees when encountering branches in the code. This is actually quite common on deeply pipelined architecture which misses complex branch prediction circuitry, not only GPUs but also number crunching devices and multimedia monsters such as IBM Cell. Keep this in mind when working on such platforms.

Three GDB tricks for the masses

Posted by Alessandro Pignotti in Coding tricks on May 8, 2009

The GNU Debugger is a very powerful and fearsome beast. It seems that I’ve found a couple of useful tricks which are not popular as they should.

- The watch command: any script kiddie knows about breakpoints. Watchpoints are based on the same concept applied to not to code, but to data memory. You can set a watchpoint on a variable using the following syntax:

watch var.It is also possible to watch a specific memory address with the syntax:watch *0xdeadbeaf. A watchpoint triggers when the contents of the memory location changes, so they are useful when trying to find out when a variable is modified. (remember that in C++ you should always use private and protected sections to stop variables from being accessed/modified outside the expected code flow). It is also possible to set read watchpoints using the rwatch command, which triggers when the location is accessed. This feature is mainly useful when reverse engineering compiled code, to find out which code path make use/are influenced by a certain variable/memory location. The major drawback of watchpoints is that common hardware support only a few of them. When gdb runs out of hardware watchpoints it resorts to software emulation, which is very slow, and not possible at all for the read ones. This also means that putting watchpoints on big structures and classes is highly discorauged. - set print object: If you’ve ever made extensive use of polymorphic classes or otherwise complex class hierarchies, you will find this setting very useful. The print command, when printing pointers of base classes, will take look at the virtual table to find out the actual object type, and print it beside the address. Moreover when dereferencing pointers it will print the full object contents, not only the base class members.

- info threads command: When you face a deadlock in your multithreaded code, the first step to understand the problem is to find out which threads are blocked. The deadlock is often not easily reproducible, so should at first attach gdb the the process using the attach <pid> command, the typing info threads you will get for each thread the function name where it is currently stopped. Threads involved in a deadlock conditions are usually stopped in sem_wait or similar semaphore related functions

Well... I guess I’m using gdb way too much, maybe I’m not a good programmer ![]()

Fun with ptrace: system emulation

Posted by Alessandro Pignotti in Coding tricks on May 4, 2009

The more I explore the interfaces deeply hidden in the linux kernel, the more a new world of opportunity opens. Today, while taking a look at the ptrace API, I found out the PTRACE_SYSEMU option.

The more I explore the interfaces deeply hidden in the linux kernel, the more a new world of opportunity opens. Today, while taking a look at the ptrace API, I found out the PTRACE_SYSEMU option.

But what is ptrace? It’s a kernel interface to check and manipulate the information that crosses the user space-kernel space frontier. Its main... principal... only user is gdb usually. The PTRACE_SYSEMU option is quite peculiar, it was implemented mainly for the user mode linux project. It allows not only to monitor the system calls invoked by a process, but also to replace the system call sematics.

So... how could this be useful? For example to experiment with different auditing/sandboxing strategies, or to build compatility layers at the system call level... but who knows what kind of funny things could be done!

A Nice Little Book of Semaphores

Posted by Jacopo Corbetta in Coding tricks on March 15, 2009

We’re currently busy working on assignments for the internal exams here at Sant’Anna School1 and we (well, Mirko) found a nice book for our parallel programming project. It’s “A Little Book of Semaphores”, freely available here.

It’s a very nice collection of concurrency problems, it deals with pretty much every one you could think of. So thank you professor Downey!

- Well, we’re also working on the usual stuff (ways to make big money, strange web 2.0 applications, high-performance-emulators, big robots, small robots, our many-times-acclaimed editor for Wikipedia, world domination, ...). [↩]

Case Study: Real Time video encoding on Via Epia, Part II

Posted by Alessandro Pignotti in Coding tricks on February 6, 2009

Once upon a time, there was the glorious empire of DOS. It was a mighty and fearful time, when people could still talk to the heart of the machines and write code in the forgotten language of assembly. We are now lucky enough to have powerful compilers that do most of this low level work for us, and hand crafting assembly code is not needed anyomore. But the introduction of SIMD (Single Instruction Multiple Data) within the x86 instruction set made this ancient ability useful again.

MMX/SSE are a very powerful and dangerous beast. We had almost no previous experience with low level assembly programming. And a critical problem to solve: how to convert from RGB colorspace to YUV, and do it fast on our very limited board.

As I’ve wrote on the previous article the conversion is conceptually simple and it’s basically a 3x3 matrix multiplication. That’s it, do 9 scalar products and you’re done!

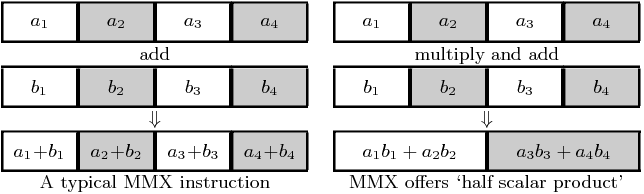

SIMD instructions operate on packed data: this means that more than one (usually 2/4) value is stored in a single register and operations on them are parallelized. For example you can do four sums with a single operation.

Unfortunately MMX/SSE is a vertical instruction set. This means you can do very little with the data packed in a single register. There are however instructions that do ‘half a scalar product’. We found out an approach to maximize the throughput using this.

Our camera, a Pointgrey Bumblebee, delivers raw sensor data via Firewire, arranged in a pattern called Bayer Encoding. Color data is arranged in 2x2 cells, and there are twice the sensors for green than for the the other colors, since the human eye is more sensible to that color. We at first rearrange the input data in a strange but useful pattern, as in picture. The following assembler code then does the magic, two pixel at a time.

//Loading mm0 with 0, this will be useful to interleave data byte pxor %mm0,%mm0 //Loading 8 bytes from buffer. Assume %eax contains the address of the input buffer //One out of four bytes are zeros, but the overhead is well balanced by the aligned memory access. //Those zeros will also be useful later on movd (%eax),%mm1 // < R1, G1, B2, 0> movd 4(%eax),%mm2 // < B1, 0, R2, G2> //Unpacking bytes to words, MMX registers are 8 bytes wide so we can interleave the data bytes with zeros. punpcklbw %mm0,%mm1 punpcklbw %mm0,%mm2 //We need triple copy of each input, one for each output channel movq %mm1,%mm3 // < R1, G1, B2, 0> movq %mm2,%mm4 // < B1, 0, R2, G2> movq %mm1,%mm5 // < R1, G1, B2, 0> movq %mm2,%mm6 // < B1, 0, R2, G2> //Multiply and accumulate, this does only half the work. //We multiply the data with the right constants and sums the results in pair. //The consts are four packed 16bit values and contains the constants scaled by 32768. //[YUV]const and [YUV]const_inv are the same apart from being arrenged to suit the layout of the even/odd inputs pmaddwd Yconst,%mm1 // < Y1*R1 + Y2*G1, Y3*B2 + 0> pmaddwd Uconst,%mm3 // < U1*R1 + U2*G1, U3*B2 + 0> pmaddwd Vconst,%mm5 // < V1*R1 + V2*G1, V3*B2 + 0> pmaddwd Yconst_inv,%mm2 // < Y3*B1 + 0, Y1*R2 + Y2*G2> pmaddwd Uconst_inv,%mm4 // < U3*B1 + 0, U1*R2 + U2*G2> pmaddwd Vconst_inv,%mm6 // < V3*B1 + 0, V1*R2 + V2*G2> //Add registers in pairs to get the final scalar product. The results are two packed pixel for each output channel and still scaled by 32768 paddd %mm2,%mm1 // < Y1*R1 + Y2*G1 + Y3*B1, Y1*R2, Y2*G2 + Y3*B2> paddd %mm4,%mm3 // < U1*R1 + U2*G1 + U3*B1, U1*R2, U2*G2 + U3*B2> paddd %mm6,%mm5 // < V1*R1 + V2*G1 + V3*B1, V1*R2, V2*G2 + V3*B2> //We shift right by 15 bits to get rid of the scaling psrad $15,%mm1 psrad $15,%mm3 psrad $15,%mm5 //const128 is two packed 32bit values, this is the offset to be added to the U/V channnels //const128: // .long 128 // .long 128 paddd const128,%mm3 paddd const128,%mm5 //We repack the resulting dwords to bytes packssdw %mm0,%mm1 packssdw %mm0,%mm3 packssdw %mm0,%mm5 packuswb %mm0,%mm1 packuswb %mm0,%mm3 packuswb %mm0,%mm5 //We copy the byte pairs to the destination buffers, assume %ebx, %esi and %edi contains the address of such buffers movd %mm1,%ecx movw %cx,(%ebx) movd %mm3,%ecx movb %cl,(%esi) movd %mm5,%ecx movb %cl,(%edi) |

Simple right? ![]()

Coding this was difficult but in the end really interesting. And even more important, this was really fast and we had no problem using this during the robot competition itself. Read the rest of this entry »

Case Study: Real Time video encoding on Via Epia, Part I

Posted by Alessandro Pignotti in Coding tricks on February 2, 2009

During the pESApod project we worked on the telecommunication and telemetry system for the robot. The computing infrastructure was very complex (maybe too much). We had three Altera FPGA on board and a very low power consumption PC, a VIA Epia board. Using devices that are light on power needs is a must for mobile robots. But we ended up using more power for the electronics than for the motors. I guess the Altera’s boards are very heavy on power, being prototyping devices.

Anyway the Epia with the onboard Eden processor is a very nice machine. It is fully x86 compatible, and we managed to run linux on it without problems. It has indeed a very low power footprint, but the performance tradeoff for this was quite heavy. The original plan was to have four video streams from the robot. A pair of proximity cameras for sample gathering and a stereocam for navigation and environment mapping. We used at the end only the stereocam, but even encoding only those two video streams on the Epia was really difficult.

We used libFAME for the encoding. The name means Fast Assembly MPEG Encoder. It is fast indeed, but it is also very poorly mantained. So we had some problems at firts to make it work. The library accept frames encoded in YUV format, but our camera sensor data was in bayer encoding. So we had to write the format conversion routine.

RGB to YUV using matrix notation

The conversion from RGB color space to YUV is quite simple and can be done using linear algebra. Our first approach was really naive and based on floating point.

// RGB* rgb; // YUV* yuv; yuv[i].y=0.299*rgb[i].r + 0.114*rgb[i].g + 0.587*rgb[i].b; yuv[i].u=128 - 0.168736*rgb[i].r - 0.331264*rgb[i].g + 0.5*rgb[i].b; yuv[i].v=128 + 0.5*rgb[i].r - 0.418688*rgb[i].g + 0.081312*rgb[i].b; |

This was really slow. We later discovered to our disappointment that the FPU was clocked at half the speed of the processor. So we changed the implementation to integer math. The result was something like this:

yuv[i].y=(299*rgb[i].r + 0.114*rgb[i].g + 0.587*rgb[i].b)/1000; yuv[i].u=128 - (169*rgb[i].r - 331*rgb[i].g + 500*rgb[i].b)/1000; yuv[i].v=128 + (500*rgb[i].r - 419*rgb[i].g + 81*rgb[i].b)/1000; |

This solution almost doubled the framerate. But it was still not enough and we had to dive deep in the magic world of MMX/SSE instructions. The details for the next issue.