Posts Tagged SSE

Allocating aligned memory

Posted by Alessandro Pignotti in Coding tricks on October 29, 2009

Just a quick note that may be useful to someone else. As you may know, SSE2 introduced a new instruction: MOVDQA (MOVe Double Quadword Aligned). It moves 128 bits (16 bytes) of data to and from memory or xmm registers, and it only works if the memory operand is aligned to a 16-byte boundary. There is another instruction for the unaligned case (MOVDQU), but the aligned version is way faster. So let’s summarize some techniques to get an aligned memory area.

- For local, static and member variables you can append __attribute__((aligned(16))) to the type definition. Example:

struct A { int val; } __attribute__((aligned(16)));

- For dynamically allocated memory the usual malloc is not enough, but there is posix_memalign, which has the semantics that we need. It is defined as:

int posix_memalign(void **memptr, size_t alignment, size_t size);

So we have to pass a pointer to the pointer that will receive the newly allocated memory, the required alignment (which has to be a power of two and a multiple of sizeof(void *)) and the allocation size. The function returns 0 on success and an error number otherwise. Memory allocated this way can be freed using the usual free function; POSIX guarantees this, so it is not specific to the glibc implementation.
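Both techniques can be sketched together as follows. This is only a minimal illustration: the helpers is_aligned16 and alloc_aligned16 are hypothetical names invented here, and the attribute syntax assumes GCC or Clang.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200112L   /* ask <stdlib.h> to declare posix_memalign */
#endif
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Statically aligned type, using the GCC/Clang attribute from the list above. */
struct A { int val; } __attribute__((aligned(16)));

/* Hypothetical helper: returns 1 if p sits on a 16-byte boundary. */
static int is_aligned16(const void *p)
{
    return ((uintptr_t)p & 15u) == 0;
}

/* Hypothetical helper: allocate `size` bytes aligned to 16 bytes.
 * Returns NULL on failure; release the result with free(). */
static void *alloc_aligned16(size_t size)
{
    void *ptr = NULL;
    /* posix_memalign reports errors through its return value, not errno. */
    if (posix_memalign(&ptr, 16, size) != 0)
        return NULL;
    return ptr;
}
```

A caller would typically check the result against NULL, hand the buffer to the SSE code, and call free on it when done.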

Case Study: Real Time video encoding on Via Epia, Part II

Posted by Alessandro Pignotti in Coding tricks on February 6, 2009

Once upon a time, there was the glorious empire of DOS. It was a mighty and fearful time, when people could still talk to the heart of the machines and write code in the forgotten language of assembly. We are now lucky enough to have powerful compilers that do most of this low-level work for us, and hand-crafting assembly code is not needed anymore. But the introduction of SIMD (Single Instruction Multiple Data) within the x86 instruction set made this ancient ability useful again.

MMX/SSE is a very powerful and dangerous beast. We had almost no previous experience with low-level assembly programming, and a critical problem to solve: how to convert from the RGB colorspace to YUV, and do it fast on our very limited board.

As I wrote in the previous article, the conversion is conceptually simple: it’s basically a 3x3 matrix multiplication. That’s it, do 3 scalar products (9 multiplications in total) and you’re done!

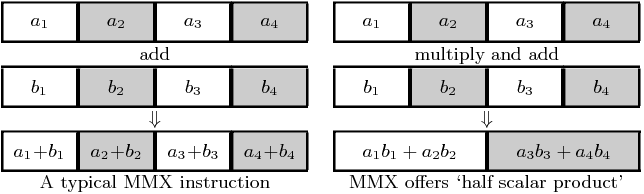
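For reference, the plain scalar version of that matrix multiplication might look like the sketch below. The coefficients are an assumption: they are the full-range BT.601 (JFIF) values, and the matrix in the figure may differ. The function names are made up for this example, and the 128 offset on U and V matches the one the assembly code adds later.

```c
#include <stdint.h>

/* ASSUMED coefficients: full-range BT.601 (JFIF). Rows produce Y, U, V. */
static const float RGB2YUV[3][3] = {
    {  0.299f,     0.587f,     0.114f    },
    { -0.168736f, -0.331264f,  0.5f      },
    {  0.5f,      -0.418688f, -0.081312f },
};

/* Clamp to [0, 255] and round to the nearest integer. */
static uint8_t clamp_u8(float v)
{
    if (v < 0.0f)   return 0;
    if (v > 255.0f) return 255;
    return (uint8_t)(v + 0.5f);
}

/* One pixel: three scalar products, one per output channel. */
static void rgb_to_yuv(const uint8_t rgb[3], uint8_t yuv[3])
{
    for (int row = 0; row < 3; ++row) {
        float acc = 0.0f;
        for (int col = 0; col < 3; ++col)
            acc += RGB2YUV[row][col] * (float)rgb[col];
        if (row > 0)
            acc += 128.0f;   /* U and V are stored with a +128 offset */
        yuv[row] = clamp_u8(acc);
    }
}
```

This is the slow baseline: nine floating-point multiplications per pixel, which is exactly what the SIMD version tries to avoid.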

SIMD instructions operate on packed data: this means that more than one (usually 2/4) value is stored in a single register and operations on them are parallelized. For example you can do four sums with a single operation.
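To make "four sums with a single operation" concrete, here is a small sketch that uses the GCC/Clang vector extension instead of hand-written MMX/SSE. The names v4si and add4 are invented for this example; on x86 with SSE2 the compiler is free to lower the addition to a single packed add, but that is up to the compiler.

```c
#include <stdint.h>
#include <string.h>

/* GCC/Clang vector extension: four 32-bit integers packed in 16 bytes. */
typedef int32_t v4si __attribute__((vector_size(16)));

/* Adds four pairs of integers with one vector expression. */
static void add4(const int32_t a[4], const int32_t b[4], int32_t out[4])
{
    v4si va, vb, vr;
    memcpy(&va, a, sizeof va);    /* load without assuming alignment */
    memcpy(&vb, b, sizeof vb);
    vr = va + vb;                 /* element-wise: four sums at once */
    memcpy(out, &vr, sizeof vr);
}
```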

Unfortunately MMX/SSE is a vertical instruction set. This means you can do very little with the data packed in a single register. There are however instructions, such as PMADDWD, that do ‘half a scalar product’. We found an approach to maximize the throughput using this.
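The ‘half a scalar product’ idea can be modelled in plain C. The sketch below mimics what the MMX instruction PMADDWD does to four packed 16-bit values; pmaddwd_model and dot3 are hypothetical names used only here, and this is a scalar model, not the SIMD code itself.

```c
#include <stdint.h>

/* Scalar model of MMX PMADDWD: multiply four signed 16-bit pairs, then add
 * adjacent products into two 32-bit results. The one input that wraps around
 * in hardware (all four operands equal to -32768) is not handled here. */
static void pmaddwd_model(const int16_t a[4], const int16_t b[4], int32_t out[2])
{
    out[0] = (int32_t)a[0] * b[0] + (int32_t)a[1] * b[1];
    out[1] = (int32_t)a[2] * b[2] + (int32_t)a[3] * b[3];
}

/* A 3-term scalar product built from it: pad with a zero, then add the two
 * halves. That final addition is the half of the work PMADDWD leaves undone. */
static int32_t dot3(const int16_t v[3], const int16_t c[3])
{
    const int16_t a[4] = { v[0], v[1], v[2], 0 };
    const int16_t b[4] = { c[0], c[1], c[2], 0 };
    int32_t halves[2];
    pmaddwd_model(a, b, halves);
    return halves[0] + halves[1];
}
```

Adding the two halves inside one register is awkward in a vertical instruction set, which is why the data layout matters so much.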

Our camera, a Pointgrey Bumblebee, delivers raw sensor data via Firewire, arranged in a pattern called Bayer Encoding. Color data is arranged in 2x2 cells, and there are twice as many sensors for green as for the other colors, since the human eye is more sensitive to that color. We first rearrange the input data in a strange but useful pattern, as in the picture. The following assembler code then does the magic, two pixels at a time.

    //Loading mm0 with 0, this will be useful to interleave data bytes
    pxor %mm0,%mm0

    //Loading 8 bytes from buffer. Assume %eax contains the address of the input buffer
    //One out of four bytes is zero, but the overhead is well balanced by the aligned memory access.
    //Those zeros will also be useful later on
    movd (%eax),%mm1 // < R1, G1, B2, 0>
    movd 4(%eax),%mm2 // < B1, 0, R2, G2>

    //Unpacking bytes to words, MMX registers are 8 bytes wide so we can interleave the data bytes with zeros.
    punpcklbw %mm0,%mm1
    punpcklbw %mm0,%mm2

    //We need three copies of each input, one for each output channel
    movq %mm1,%mm3 // < R1, G1, B2, 0>
    movq %mm2,%mm4 // < B1, 0, R2, G2>
    movq %mm1,%mm5 // < R1, G1, B2, 0>
    movq %mm2,%mm6 // < B1, 0, R2, G2>

    //Multiply and accumulate, this does only half the work.
    //We multiply the data with the right constants and sum the results in pairs.
    //The consts are four packed 16bit values and contain the constants scaled by 32768.
    //[YUV]const and [YUV]const_inv are the same apart from being arranged to suit the layout of the even/odd inputs
    pmaddwd Yconst,%mm1 // < Y1*R1 + Y2*G1, Y3*B2 + 0>
    pmaddwd Uconst,%mm3 // < U1*R1 + U2*G1, U3*B2 + 0>
    pmaddwd Vconst,%mm5 // < V1*R1 + V2*G1, V3*B2 + 0>
    pmaddwd Yconst_inv,%mm2 // < Y3*B1 + 0, Y1*R2 + Y2*G2>
    pmaddwd Uconst_inv,%mm4 // < U3*B1 + 0, U1*R2 + U2*G2>
    pmaddwd Vconst_inv,%mm6 // < V3*B1 + 0, V1*R2 + V2*G2>

    //Add registers in pairs to get the final scalar product.
    //The results are two packed pixels for each output channel and still scaled by 32768
    paddd %mm2,%mm1 // < Y1*R1 + Y2*G1 + Y3*B1, Y1*R2 + Y2*G2 + Y3*B2>
    paddd %mm4,%mm3 // < U1*R1 + U2*G1 + U3*B1, U1*R2 + U2*G2 + U3*B2>
    paddd %mm6,%mm5 // < V1*R1 + V2*G1 + V3*B1, V1*R2 + V2*G2 + V3*B2>

    //We shift right by 15 bits to get rid of the scaling
    psrad $15,%mm1
    psrad $15,%mm3
    psrad $15,%mm5

    //const128 is two packed 32bit values, this is the offset to be added to the U/V channels
    //const128:
    // .long 128
    // .long 128
    paddd const128,%mm3
    paddd const128,%mm5

    //We repack the resulting dwords to bytes
    packssdw %mm0,%mm1
    packssdw %mm0,%mm3
    packssdw %mm0,%mm5
    packuswb %mm0,%mm1
    packuswb %mm0,%mm3
    packuswb %mm0,%mm5

    //We copy the byte pairs to the destination buffers, assume %ebx, %esi and %edi contain the addresses of such buffers
    movd %mm1,%ecx
    movw %cx,(%ebx)
    movd %mm3,%ecx
    movb %cl,(%esi)
    movd %mm5,%ecx
    movb %cl,(%edi)

Simple, right?
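For anyone who wants to cross-check the assembly, here is a scalar C reference model of the same steps for one pair of pixels. It is a sketch under stated assumptions: the coefficients are full-range BT.601 values scaled by 32768 (the constants we actually used are not listed here), all function names are hypothetical, and the right shift of a negative value is assumed to be arithmetic, as it is with GCC and Clang.

```c
#include <stdint.h>

/* ASSUMED constants: full-range BT.601 scaled by 32768 and rounded. */
static const int16_t COEF[3][3] = {
    {  9798,  19235,   3736 },   /* Y1, Y2, Y3 */
    { -5529, -10855,  16384 },   /* U1, U2, U3 */
    { 16384, -13720,  -2664 },   /* V1, V2, V3 */
};

/* Input layout from the assembly comments:
 * <R1, G1, B2, 0> followed by <B1, 0, R2, G2>. */
static void pack_pair(const uint8_t p1[3], const uint8_t p2[3], uint8_t out[8])
{
    out[0] = p1[0]; out[1] = p1[1]; out[2] = p2[2]; out[3] = 0;
    out[4] = p1[2]; out[5] = 0;     out[6] = p2[0]; out[7] = p2[1];
}

/* Net effect of packssdw followed by packuswb: clamp to [0, 255]. */
static uint8_t saturate_u8(int32_t v)
{
    return v < 0 ? 0 : v > 255 ? 255 : (uint8_t)v;
}

/* One output channel for both pixels: pmaddwd, paddd, psrad, offset, pack. */
static void channel_pair(const uint8_t in[8], const int16_t c[3],
                         int32_t offset, uint8_t out[2])
{
    int32_t lo0 = in[0] * c[0] + in[1] * c[1];   /* c1*R1 + c2*G1 */
    int32_t lo1 = in[2] * c[2];                  /* c3*B2 + 0     */
    int32_t hi0 = in[4] * c[2];                  /* c3*B1 + 0     */
    int32_t hi1 = in[6] * c[0] + in[7] * c[1];   /* c1*R2 + c2*G2 */
    out[0] = saturate_u8(((lo0 + hi0) >> 15) + offset);
    out[1] = saturate_u8(((lo1 + hi1) >> 15) + offset);
}

/* Two pixels in, two values per channel out. */
static void rgb_pair_to_yuv(const uint8_t in[8],
                            uint8_t y[2], uint8_t u[2], uint8_t v[2])
{
    channel_pair(in, COEF[0], 0, y);
    channel_pair(in, COEF[1], 128, u);
    channel_pair(in, COEF[2], 128, v);
}
```

Note that the assembly stores both Y bytes but only the first U and V byte of each pair, while this model returns both values for every channel and leaves that choice to the caller.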

Coding this was difficult, but in the end really interesting. Even more important, it was really fast, and we had no problem using it during the robot competition itself.