Archive for February, 2009

Samba upgrade headache

Posted by Alessandro Pignotti in Sysadmin Kung Fu on February 11, 2009

Even a Debian machine can become a source of problems when it has not been nursed by the loving hands of a system administrator for a long time. I found myself upgrading Samba from version 3.0.24 to 3.2.5 in one go on our main fileserver, and suddenly none of the Windows machines here at school could access the shares anymore. The problem does not seem to be documented anywhere, so I took a deep breath and started scrolling through the huge Samba changelog between the old and the new version. I was lucky: the problematic change happened in version 3.0.25a. It seems that the default value of the msdfs root option changed from true to false, but Windows had cached the old information. The solution is the usual one: just reboot Windows.

Case Study: Real Time video encoding on Via Epia, Part II

Posted by Alessandro Pignotti in Coding tricks on February 6, 2009

Once upon a time, there was the glorious empire of DOS. It was a mighty and fearful time, when people could still talk to the heart of the machines and write code in the forgotten language of assembly. We are now lucky enough to have powerful compilers that do most of this low-level work for us, and hand-crafting assembly code is not needed anymore. But the introduction of SIMD (Single Instruction Multiple Data) within the x86 instruction set made this ancient ability useful again.

MMX/SSE are a very powerful and dangerous beast. We had almost no previous experience with low-level assembly programming, and a critical problem to solve: how to convert from the RGB colorspace to YUV, and do it fast on our very limited board.

As I wrote in the previous article, the conversion is conceptually simple: it is basically a 3x3 matrix multiplication. That’s it, do 9 multiplications (three scalar products of three terms each) and you’re done!

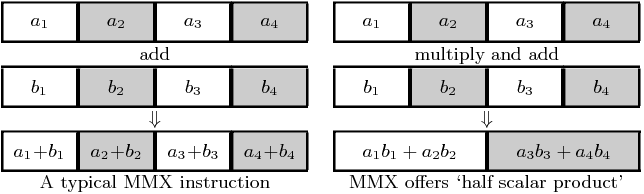

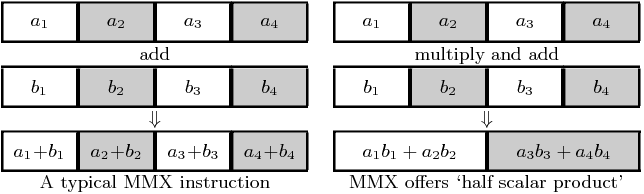

SIMD instructions operate on packed data: more than one value (usually 2 or 4) is stored in a single register, and the operations on them run in parallel. For example, you can do four sums with a single instruction.

Unfortunately MMX/SSE is a vertical instruction set: operations work element by element across two registers, so you can do very little between the values packed in a single register. There are, however, instructions that do ‘half a scalar product’. We found an approach that maximizes throughput using them.
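To make ‘half a scalar product’ concrete, here is a minimal scalar sketch in C of what the `pmaddwd` instruction used later in this post computes. The function names `pmaddwd_model` and `dot3_model` are hypothetical and exist only for illustration; this is a model of the arithmetic, not the code we ran on the board.

```c
#include <stdint.h>

/* Scalar model of MMX pmaddwd: multiply four pairs of signed 16-bit
 * words, then add adjacent products into two 32-bit results. */
static void pmaddwd_model(const int16_t a[4], const int16_t b[4],
                          int32_t out[2])
{
    out[0] = (int32_t)a[0] * b[0] + (int32_t)a[1] * b[1];
    out[1] = (int32_t)a[2] * b[2] + (int32_t)a[3] * b[3];
}

/* A three-term scalar product padded with a zero in the fourth slot:
 * one pmaddwd yields two partial sums, and one extra addition
 * finishes the job. */
static int32_t dot3_model(const int16_t coeff[3], const int16_t value[3])
{
    const int16_t a[4] = { coeff[0], coeff[1], coeff[2], 0 };
    const int16_t b[4] = { value[0], value[1], value[2], 0 };
    int32_t partial[2];

    pmaddwd_model(a, b, partial);
    return partial[0] + partial[1];
}
```

Each result of `pmaddwd_model` covers only two of the three terms of a color conversion row, which is why the partial sums still have to be added together afterwards.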

Our camera, a Pointgrey Bumblebee, delivers raw sensor data via Firewire, arranged in a pattern called Bayer encoding. Color data is arranged in 2x2 cells, and there are twice as many sensors for green as for the other colors, since the human eye is more sensitive to green. We first rearrange the input data in a strange but useful pattern, as in the picture. The following assembler code then does the magic, two pixels at a time.

    // Load mm0 with 0; this will be useful to interleave the data bytes
    pxor %mm0,%mm0

    // Load 8 bytes from the buffer. Assume %eax contains the address of the input buffer.
    // One byte out of four is zero, but the overhead is well balanced by the aligned memory access.
    // Those zeros will also be useful later on.
    movd (%eax),%mm1            // < R1, G1, B2, 0>
    movd 4(%eax),%mm2           // < B1, 0, R2, G2>

    // Unpack bytes to words. MMX registers are 8 bytes wide, so we can interleave the data bytes with zeros.
    punpcklbw %mm0,%mm1
    punpcklbw %mm0,%mm2

    // We need three copies of each input, one for each output channel
    movq %mm1,%mm3              // < R1, G1, B2, 0>
    movq %mm2,%mm4              // < B1, 0, R2, G2>
    movq %mm1,%mm5              // < R1, G1, B2, 0>
    movq %mm2,%mm6              // < B1, 0, R2, G2>

    // Multiply and accumulate; this does only half the work.
    // We multiply the data by the right constants and sum the results in pairs.
    // Each const holds four packed 16-bit values: the coefficients scaled by 32768.
    // [YUV]const and [YUV]const_inv are the same apart from being arranged to suit the layout of the even/odd inputs
    pmaddwd Yconst,%mm1         // < Y1*R1 + Y2*G1, Y3*B2 + 0>
    pmaddwd Uconst,%mm3         // < U1*R1 + U2*G1, U3*B2 + 0>
    pmaddwd Vconst,%mm5         // < V1*R1 + V2*G1, V3*B2 + 0>
    pmaddwd Yconst_inv,%mm2     // < Y3*B1 + 0, Y1*R2 + Y2*G2>
    pmaddwd Uconst_inv,%mm4     // < U3*B1 + 0, U1*R2 + U2*G2>
    pmaddwd Vconst_inv,%mm6     // < V3*B1 + 0, V1*R2 + V2*G2>

    // Add registers in pairs to get the final scalar products.
    // The results are two packed pixels for each output channel, still scaled by 32768
    paddd %mm2,%mm1             // < Y1*R1 + Y2*G1 + Y3*B1, Y1*R2 + Y2*G2 + Y3*B2>
    paddd %mm4,%mm3             // < U1*R1 + U2*G1 + U3*B1, U1*R2 + U2*G2 + U3*B2>
    paddd %mm6,%mm5             // < V1*R1 + V2*G1 + V3*B1, V1*R2 + V2*G2 + V3*B2>

    // Shift right by 15 bits to get rid of the scaling
    psrad $15,%mm1
    psrad $15,%mm3
    psrad $15,%mm5

    // const128 is two packed 32-bit values: the offset to be added to the U/V channels
    // const128:
    //     .long 128
    //     .long 128
    paddd const128,%mm3
    paddd const128,%mm5

    // Repack the resulting dwords to bytes
    packssdw %mm0,%mm1
    packssdw %mm0,%mm3
    packssdw %mm0,%mm5
    packuswb %mm0,%mm1
    packuswb %mm0,%mm3
    packuswb %mm0,%mm5

    // Copy the results to the destination buffers: two Y bytes, but only one U and one V byte.
    // Assume %ebx, %esi and %edi contain the addresses of those buffers.
    movd %mm1,%ecx
    movw %cx,(%ebx)
    movd %mm3,%ecx
    movb %cl,(%esi)
    movd %mm5,%ecx
    movb %cl,(%edi)

Simple, right?

Coding this was difficult, but in the end really interesting. Even more important, it was really fast, and we had no problems using it during the robot competition itself.
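For readers who want to check the arithmetic without an assembler at hand, the following is a scalar C sketch of the same fixed-point scheme: coefficients scaled by 32768, an arithmetic right shift by 15, the 128 offset on U/V, and saturation to a byte. The type and function names (`rgb_t`, `yuv_t`, `rgb_to_yuv_q15`) are hypothetical, and the rounded constants are my assumption of what `Yconst`, `Uconst` and `Vconst` would hold, based on the standard coefficients; the original tables are not shown in this post.

```c
#include <stdint.h>

typedef struct { uint8_t r, g, b; } rgb_t;   /* hypothetical pixel types */
typedef struct { uint8_t y, u, v; } yuv_t;

/* Arithmetic shift right by 15 (what psrad does), written so that it
 * does not depend on how the compiler shifts negative values. */
static int32_t asr15(int32_t x)
{
    return x >= 0 ? x >> 15 : -((-x + 32767) >> 15);
}

/* Saturate to 0..255, like the final packuswb. */
static uint8_t clamp_u8(int32_t x)
{
    return x < 0 ? 0 : x > 255 ? 255 : (uint8_t)x;
}

/* Coefficients are the usual RGB-to-YUV weights multiplied by 32768
 * and rounded (assumed values, e.g. 0.299 * 32768 is about 9798). */
static yuv_t rgb_to_yuv_q15(rgb_t p)
{
    yuv_t o;
    o.y = clamp_u8(asr15( 9798 * p.r + 19235 * p.g +  3736 * p.b));
    o.u = clamp_u8(asr15(-5529 * p.r - 10855 * p.g + 16384 * p.b) + 128);
    o.v = clamp_u8(asr15(16384 * p.r - 13720 * p.g -  2664 * p.b) + 128);
    return o;
}
```

All coefficients fit in a signed 16-bit word, which is what allows them to be packed four at a time for `pmaddwd`.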

Case Study: Real Time video encoding on Via Epia, Part I

Posted by Alessandro Pignotti in Coding tricks on February 2, 2009

During the pESApod project we worked on the telecommunication and telemetry system for the robot. The computing infrastructure was very complex (maybe too complex). We had three Altera FPGAs on board and a very low-power PC, a VIA Epia board. Using devices that are light on power is a must for mobile robots, but we ended up using more power for the electronics than for the motors. I guess the Altera boards are very heavy on power, being prototyping devices.

Anyway, the Epia with the onboard Eden processor is a very nice machine. It is fully x86 compatible, and we managed to run Linux on it without problems. It does have a very low power footprint, but the performance tradeoff for this was quite heavy. The original plan was to have four video streams from the robot: a pair of proximity cameras for sample gathering and a stereocam for navigation and environment mapping. In the end we used only the stereocam, but even encoding just those two video streams on the Epia was really difficult.

We used libFAME for the encoding. The name means Fast Assembly MPEG Encoder. It is fast indeed, but it is also very poorly maintained, so we had some problems at first getting it to work. The library accepts frames encoded in YUV format, but our camera sensor data was in Bayer encoding, so we had to write the format conversion routine.
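A Bayer mosaic stores a single color sample per sensor element, in 2x2 cells that contain one red, two green and one blue sample. As a rough illustration of the first half of such a conversion, here is a minimal C sketch that collapses each cell into one RGB pixel at half resolution. It assumes an RGGB cell layout and the hypothetical function name `bayer_rggb_to_rgb_half`; the actual layout of our camera and the routine we wrote may differ.

```c
#include <stddef.h>
#include <stdint.h>

/* raw: width * height bytes, assumed RGGB layout:
 *        R G R G ...
 *        G B G B ...
 * rgb: (width / 2) * (height / 2) * 3 output bytes, one RGB pixel
 * per 2x2 cell. The two green samples are averaged with rounding. */
static void bayer_rggb_to_rgb_half(const uint8_t *raw, size_t width,
                                   size_t height, uint8_t *rgb)
{
    for (size_t y = 0; y + 1 < height; y += 2) {
        for (size_t x = 0; x + 1 < width; x += 2) {
            const uint8_t *top = raw + y * width + x;  /* R G */
            const uint8_t *bot = top + width;          /* G B */
            uint8_t *out = rgb + ((y / 2) * (width / 2) + x / 2) * 3;

            out[0] = top[0];
            out[1] = (uint8_t)((top[1] + bot[0] + 1) / 2);
            out[2] = bot[1];
        }
    }
}
```

Halving the resolution avoids any interpolation between neighbouring cells, which keeps the sketch short; a full-resolution demosaic needs more work per pixel.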

RGB to YUV using matrix notation

The conversion from RGB color space to YUV is quite simple and can be done using linear algebra. Our first approach was really naive and based on floating point.

    // RGB* rgb;
    // YUV* yuv;
    yuv[i].y = 0.299*rgb[i].r + 0.587*rgb[i].g + 0.114*rgb[i].b;
    yuv[i].u = 128 - 0.168736*rgb[i].r - 0.331264*rgb[i].g + 0.5*rgb[i].b;
    yuv[i].v = 128 + 0.5*rgb[i].r - 0.418688*rgb[i].g - 0.081312*rgb[i].b;

This was really slow. We later discovered to our disappointment that the FPU was clocked at half the speed of the processor. So we changed the implementation to integer math. The result was something like this:

    yuv[i].y = (299*rgb[i].r + 587*rgb[i].g + 114*rgb[i].b)/1000;
    yuv[i].u = 128 + (-169*rgb[i].r - 331*rgb[i].g + 500*rgb[i].b)/1000;
    yuv[i].v = 128 + (500*rgb[i].r - 419*rgb[i].g - 81*rgb[i].b)/1000;

This solution almost doubled the framerate, but it was still not enough, and we had to dive deep into the magic world of MMX/SSE instructions. The details will follow in the next post.
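As a compilable illustration of the two approaches, the sketch below wraps the floating point and the integer formulas in functions with clamping. The `RGB` and `YUV` struct layouts and the function names are assumptions made for this example (the post only names the types), and the coefficients are the standard ones, with green carrying the largest weight in Y.

```c
#include <stdint.h>

typedef struct { uint8_t r, g, b; } RGB;   /* assumed layouts */
typedef struct { uint8_t y, u, v; } YUV;

/* Round to nearest and saturate to 0..255. */
static uint8_t round_clamp(double x)
{
    if (x <= 0.0) return 0;
    if (x >= 255.0) return 255;
    return (uint8_t)(x + 0.5);
}

static uint8_t clamp_int(int x)
{
    return x < 0 ? 0 : x > 255 ? 255 : (uint8_t)x;
}

/* Naive version: one floating point scalar product per channel. */
static YUV rgb_to_yuv_float(RGB p)
{
    YUV o;
    o.y = round_clamp(0.299 * p.r + 0.587 * p.g + 0.114 * p.b);
    o.u = round_clamp(128 - 0.168736 * p.r - 0.331264 * p.g + 0.5 * p.b);
    o.v = round_clamp(128 + 0.5 * p.r - 0.418688 * p.g - 0.081312 * p.b);
    return o;
}

/* Integer version: coefficients scaled by 1000, one division per channel. */
static YUV rgb_to_yuv_int(RGB p)
{
    YUV o;
    o.y = clamp_int((299 * p.r + 587 * p.g + 114 * p.b) / 1000);
    o.u = clamp_int(128 + (-169 * p.r - 331 * p.g + 500 * p.b) / 1000);
    o.v = clamp_int(128 + (500 * p.r - 419 * p.g - 81 * p.b) / 1000);
    return o;
}
```

The integer version truncates instead of rounding, so the two functions can differ by one on some inputs. Scaling by a power of two instead of 1000 would turn the division into a shift.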

Let’s join the information stream

Posted by Alessandro Pignotti in Uncategorized on February 1, 2009

Hello world,

Let’s introduce ourselves. We are a bunch of students at Scuola Superiore Sant’Anna in Pisa, Italy. We often start (less often finish) a lot of projects here. This is primarily a place for us to write down our ideas. Maybe someone out there could find them useful as well.

Our main interests are linked to computing, programming and security. But maybe other topics will be touched, who knows...

See you soon
