Archive for October, 2009

Allocating aligned memory

Posted by Alessandro Pignotti in Coding tricks on October 29, 2009

Just a quick note that may be useful to someone else. As you may know SSE2 introduced a new instruction: MOVDQA (MOVe Double Quadword Aligned). This is used to move 128 bit (16 bytes) of data from/to memory/xmm registers. This instruction only works if the data is aligned the the 16 byte boundary. There is also another instruction for the unaligned case, but the aligned version is way faster. So let’s summarize some techniques to get an aligned memory area

- For local, static and member variables you can append __attribute__ (( aligned (16 ) ) to the type definition. Example:

- For dynamically allocated memory the usual malloc is not enough, but there is a posix_memalign which has the semantics that we need. It is defined as:

struct A { int val; } __attribute__ ((aligned ( 16 ) );

int posix_memalign(void **memptr, size_t alignment, size_t size);

So we have to pass a pointer to the pointer that will receive the newly allocated memory, the required alignment (which has to be a power of two) and the allocation size. Memory allocated this way can (at least on the glibc implementation) be freed using the usual free function.

aligned memory, glibc, linux, MMX, performance, SSE, x86

Allocating aligned memory

Posted by Alessandro Pignotti in Coding tricks on October 29, 2009

Just a quick note that may be useful to someone else. As you may know SSE2 introduced a new instruction: MOVDQA (MOVe Double Quadword Aligned). This is used to move 128 bit (16 bytes) of data from/to memory/xmm registers. This instruction only works if the data is aligned the the 16 byte boundary. There is also another instruction for the unaligned case, but the aligned version is way faster. So let’s summarize some techniques to get an aligned memory area

- For local, static and member variables you can append __attribute__ (( aligned (16 ) ) to the type definition. Example:

- For dynamically allocated memory the usual malloc is not enough, but there is a posix_memalign which has the semantics that we need. It is defined as:

struct A { int val; } __attribute__ ((aligned ( 16 ) );

int posix_memalign(void **memptr, size_t alignment, size_t size);

So we have to pass a pointer to the pointer that will receive the newly allocated memory, the required alignment (which has to be a power of two) and the allocation size. Memory allocated this way can (at least on the glibc implementation) be freed using the usual free function.

aligned memory, glibc, linux, MMX, performance, SSE, x86

Talking about CPU architectures: Then it came assembly chain

Posted by Alessandro Pignotti in Bar discussions on October 22, 2009

As we’ve seen in the previous essay in a simple processor design each instruction needs several cycles to be completed. Each instruction passes several steps: first it’s loaded from memory (FETCH0 state), then it’s decoded (FETCH1 state) and the appropriate code path is selected to compute the operation results. In the my example I’ve not included other two essential steps: operands fetching and results storing on memory. This sequential approach has a big drawback, as for most of the time the CPU circuits (such as the memory interface or the ALU) are not doing useful work. If on average each instruction takes five steps to complete, then the processor is only executing one instruction every five cycles. To solve this problem we can introduce the pipeline.

The pipeline concept is borrowed from the industrial assembly line. The basic idea is to decouple the sequential steps of each instruction by inserting buffer registers in between. Each stage of the pipeline is isolated, and on each clock cycle it can take the input from the register upstream and output the results into the register downstream. Of course not every instruction needs processing by all stages (for example it may or may not access memory) and so each stage has to have some kind of No Operation mode to pass trough the unmodified input register to the output.

A simple pseudo-verilog description of a pipelined processor follows. We are assuming that the instruction and data memory are physically separate: an Havard like architecture. This is not true in current mainstream architectures, as both instruction and data are stored in RAM, but may be considered true to a large extend if there is a fast instruction cache. Moreover it has to be noted that although the access_memory module is referenced both in the input fetching and output storing stages, it can only correctly serve one request at a time, and so some kind of arbitration has to be used. We can arbitrarily decide that reads are prioritized over write, but in case of a collision the operation will be for sure not correct, though safe for the electronics. The register_file module is just a multiplexer for the registers, it will output the value of the registers for the provided indexes. The module it’s used both in the operands fetching and writeback stages, but it’s safe because it allows two register reads and one write and the same time (three way access).

This toy processor is inspired from MIPS architecture and as you can see it uses fixed length instructions. But I’ve also tried to design something more similar to an x86 processor. The instruction set (which I’m too lazy to define extensively) could allow both register-register and register-memory operation, and output to either memory or registers. No indirect memory access is allowed, sorry. As the instructions are four byte long probably only a 16 or 24 bit flat address space could be accessed.

always @(posedge clock)

begin

//

//Instructrion fetch stage

FETCHED_INSTRUCTION <= get_instruction_memory(PC); PC <= PC+4;

//

//Instruction decoding stage

{OPCODE_0, OPERAND1_TYPE, REG1_INDEX, REG2_INDEX, MEM_ADDRESS_0, WRITE_TO_MEMORY_0, DEST_REG_0} <= decode_instruction(FETCHED_INSTRUCTION);

//

//Operands fecthing stage, some register will be forwarded to the next stages

OPERAND1 <= (OPERAND1_TYPE==REG) ?

read_register_file(REG1_INDEX, REG2_INDEX)[31:0] :

access_memory(MEM_ADDRESS_0,READ,UNSPECIFIED);

OPERAND2 <= register_file(REG1_INDEX,REG2_INDEX)[63:32];

MEM_ADDRESS_1 <= MEM_ADDRESS_0;

WRITE_TO_MEMORY_1 <= WRITE_TO_MEMORY_0;

DEST_REG_1 <= DEST_REG_0;

OPCODE_1 <= OPCODE_0;

//

//Execution stage

RESULT <= alu(OPCODE_1,OPERAND1,OPERAND2);

MEM_ADDRESS_2 <= MEM_ADDRESS_1;

WRITE_TO_MEMORY_2 <= WRITE_TO_MEMORY_1;

DEST_REG_2 <= DEST_REG_1;

//

//Writeback stage

(WRITE_TO_MEMORY_2) ? access_memory(MEM_ADDRESS_2,WRITE,RESULT) : write_register_file(DEST_REG_2, RESULT);

end

Several considerations can be made on this design. First of all extreme care is needed from the compiler (or the user, if it’s crazy enough to write assembly manually), because there are several hazards, not only as said previously on concurrent read/write memory access, but also on registers. As the result of an instruction is written back to the register file only at the end of the pipeline it’s not legal to access a result before five more instruction has been executed. To guarantee memory access validity it’s enough to avoid issuing a memory write two instruction after a memory load. If no useful instruction can be issued during this forced waiting, a NOP should be inserted.

Real world architecture employs interlocking techniques which takes care of stopping (stalling) the pipeline when an hazard could occur. I’ll describe stalling in more detail in another essay. Anyway if a pipeline stage, such us the ALU for complicated computation (for example integer division), needs more than a cycle to complete it will stall the provious stages of the pipeline, and insert so called bubbles into the later stage. Bubbles are basically safe No Operation input states, the NOP opcode in any architecture is just an explicit pipeline bubble. Even in modern interlocked architecture correct instruction scheduling is extremely useful to gain performance as pipeline bubbles actually waste execution time.

That’s all for now, in the previous post I forgot to mention the ever failing, but very nice Very Long Instruction Word (VLIW) approach. I’m of course going to talk about that to sometime.

assembly, Cpu architectir, CPU architectures, CPU de, CPU design, mips, performance, pipeline, Verilog, x86

Talking about CPU architectures: Then it came assembly chain

Posted by Alessandro Pignotti in Bar discussions on October 22, 2009

As we’ve seen in the previous essay in a simple processor design each instruction needs several cycles to be completed. Each instruction passes several steps: first it’s loaded from memory (FETCH0 state), then it’s decoded (FETCH1 state) and the appropriate code path is selected to compute the operation results. In the my example I’ve not included other two essential steps: operands fetching and results storing on memory. This sequential approach has a big drawback, as for most of the time the CPU circuits (such as the memory interface or the ALU) are not doing useful work. If on average each instruction takes five steps to complete, then the processor is only executing one instruction every five cycles. To solve this problem we can introduce the pipeline.

The pipeline concept is borrowed from the industrial assembly line. The basic idea is to decouple the sequential steps of each instruction by inserting buffer registers in between. Each stage of the pipeline is isolated, and on each clock cycle it can take the input from the register upstream and output the results into the register downstream. Of course not every instruction needs processing by all stages (for example it may or may not access memory) and so each stage has to have some kind of No Operation mode to pass trough the unmodified input register to the output.

A simple pseudo-verilog description of a pipelined processor follows. We are assuming that the instruction and data memory are physically separate: an Havard like architecture. This is not true in current mainstream architectures, as both instruction and data are stored in RAM, but may be considered true to a large extend if there is a fast instruction cache. Moreover it has to be noted that although the access_memory module is referenced both in the input fetching and output storing stages, it can only correctly serve one request at a time, and so some kind of arbitration has to be used. We can arbitrarily decide that reads are prioritized over write, but in case of a collision the operation will be for sure not correct, though safe for the electronics. The register_file module is just a multiplexer for the registers, it will output the value of the registers for the provided indexes. The module it’s used both in the operands fetching and writeback stages, but it’s safe because it allows two register reads and one write and the same time (three way access).

This toy processor is inspired from MIPS architecture and as you can see it uses fixed length instructions. But I’ve also tried to design something more similar to an x86 processor. The instruction set (which I’m too lazy to define extensively) could allow both register-register and register-memory operation, and output to either memory or registers. No indirect memory access is allowed, sorry. As the instructions are four byte long probably only a 16 or 24 bit flat address space could be accessed.

always @(posedge clock)

begin

//

//Instructrion fetch stage

FETCHED_INSTRUCTION <= get_instruction_memory(PC); PC <= PC+4;

//

//Instruction decoding stage

{OPCODE_0, OPERAND1_TYPE, REG1_INDEX, REG2_INDEX, MEM_ADDRESS_0, WRITE_TO_MEMORY_0, DEST_REG_0} <= decode_instruction(FETCHED_INSTRUCTION);

//

//Operands fecthing stage, some register will be forwarded to the next stages

OPERAND1 <= (OPERAND1_TYPE==REG) ?

read_register_file(REG1_INDEX, REG2_INDEX)[31:0] :

access_memory(MEM_ADDRESS_0,READ,UNSPECIFIED);

OPERAND2 <= register_file(REG1_INDEX,REG2_INDEX)[63:32];

MEM_ADDRESS_1 <= MEM_ADDRESS_0;

WRITE_TO_MEMORY_1 <= WRITE_TO_MEMORY_0;

DEST_REG_1 <= DEST_REG_0;

OPCODE_1 <= OPCODE_0;

//

//Execution stage

RESULT <= alu(OPCODE_1,OPERAND1,OPERAND2);

MEM_ADDRESS_2 <= MEM_ADDRESS_1;

WRITE_TO_MEMORY_2 <= WRITE_TO_MEMORY_1;

DEST_REG_2 <= DEST_REG_1;

//

//Writeback stage

(WRITE_TO_MEMORY_2) ? access_memory(MEM_ADDRESS_2,WRITE,RESULT) : write_register_file(DEST_REG_2, RESULT);

end

Several considerations can be made on this design. First of all extreme care is needed from the compiler (or the user, if it’s crazy enough to write assembly manually), because there are several hazards, not only as said previously on concurrent read/write memory access, but also on registers. As the result of an instruction is written back to the register file only at the end of the pipeline it’s not legal to access a result before five more instruction has been executed. To guarantee memory access validity it’s enough to avoid issuing a memory write two instruction after a memory load. If no useful instruction can be issued during this forced waiting, a NOP should be inserted.

Real world architecture employs interlocking techniques which takes care of stopping (stalling) the pipeline when an hazard could occur. I’ll describe stalling in more detail in another essay. Anyway if a pipeline stage, such us the ALU for complicated computation (for example integer division), needs more than a cycle to complete it will stall the provious stages of the pipeline, and insert so called bubbles into the later stage. Bubbles are basically safe No Operation input states, the NOP opcode in any architecture is just an explicit pipeline bubble. Even in modern interlocked architecture correct instruction scheduling is extremely useful to gain performance as pipeline bubbles actually waste execution time.

That’s all for now, in the previous post I forgot to mention the ever failing, but very nice Very Long Instruction Word (VLIW) approach. I’m of course going to talk about that to sometime.

assembly, Cpu architectir, CPU architectures, CPU de, CPU design, mips, performance, pipeline, Verilog, x86

Talking about CPU architectures: The beginning

Posted by Alessandro Pignotti in Bar discussions on October 20, 2009

Some days ago I was talking about multicore systems and modern CPU technologies with a younger engineering student at Scuola Superiore Sant’Anna. I was quite surprised by how much misconceptions and hype surrounds this topic, even for people that actually study computer engineering. Especially considering that the courses of the University of Pisa are quite good when it comes to low level hardware stuff (computer engineering was born by branching electronic engineering, and the legacy is still quite evident).

So I’ll try to write some articles about CPU architectures, from the basic to advanced topics... well... as advanced as my current knowledge goes, still better than nothing.

Ok, for this essay I’ll basically reference the simple processor design that we study in our awesome Logic Circuits course (thanks Prof. Corsini, even if I’ve not seen a single class of it). The code presented is written is something hopefully similar to Verilog, the hardware description language.

This processor is basically a simple state machine. The internal state is stored in some synchronous register, usually implemented using so called edge-triggered flip-flops. There is a clock source which generates a square wave. The clock is used as an input to registers, which are allowed to get a new value only on the raising edge of the wave. The new value of the registers is usually computed by stateless asynchronous circuits.

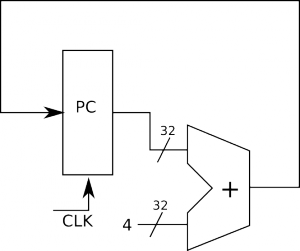

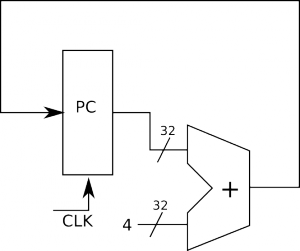

A simple circuit to get the address of the next instruction: the new address is computed during the clock cycle by the asynchronous adder and assigned at the next rising edge of the clock

This means that the output depends only on the input, and the clock signal is not used. An example of this kind of circuits could be the adder, or the more complex ALU. Those circuits takes some time to complete their work, the adder, for example, has to propagate the carry information from the least significant bit to the most significant bit. Before the computation is complete the output state undergoes several spurious transitions (add gif) which will be ignored by the following register, as the new value will only be captured on the raising edge of the clock.

This allows us to understand two concepts:

- The faster the clock is, the more new states can be computed, and so the more operations can be done. So this is what the vendors are talking about when they market they several GhZ CPUs.

- Why can’t the clock be infinitely fast? Because to guarantee that all the spurious transitions from asynchronous circuits will be discarted the clock cycle time has to be at least a little longer than the longest settle time of those circuits.

For a long time during the previous years it was easy to pump up the clock speeds, from the 4 Mhz of the first 8088 to the 4 Ghz of the last Pentiums, as the progress in CMOS techlogies allowed to make the circuits smaller and smaller, which also means basically faster and faster. But after a while this had to stop, even if transistors are still getting smaller. The main problem right now is that power consumption and heat production go with the square of the clock frequency, which poses a very heavy upper limit to the reasonable clock speed, if you’re not willing to use liquid refrigeration.

This simple 3 bit adder needs some time to add 011 (3) and 001 (1) because it has to propagate carry information

So, back to the CPU internals. One of the registers has a special purpose: it keeps the state of the processor and it’s usually called STAR (STAtus Register). So the ipotetical pseudo-verilog for this processor would be:

reg [31:0] MBR, STAR, EAX, PC;

always @(posedge clock)

begin

case(STAR)

FETCH0: MBR <= get_memory(PC); STAR <= FETCH1; PC <= PC+4;

FETCH1: STAR <= decode_opcode(MBR);

...

INC0: EAX <= alu(OP_ADD,EAX,1) STAR <= FETCH0;

...

HLT: STAR<=HLT;

end

This would be a very simple, incomplete and totally useless fixed instruction length CPU, which can only increment the accumulator and halt itself. Let’s explain this code a bit. MBR, STAR, EAX and PC are defined as registers, in this case they are 32 bits wide. The STAR register could be larger or smaller depending on the number of possible internal state. The MBR is the Master Buffer Register and it’s used as a temporary storage for computations which should not be seen by the user, while EAX and PC are the accumulator and the program counter which we’re all used to. The \emph{always} syntax means that the actions has to be taken on the raising edge of the clock. Registers which are assigned in this block are usually implemented using an array of edge triggered flip-flops. The <= symbols means non blocking assignment. All those assignments are done in parallel and they will only be effective at the following cycle. The FETCH,INC and HLT are just symbolic version for some numerical constants, which represent the state. The get_memory, decode_opcode and alu symbols are all asynchronous circuits, they will take their inputs and generate the output after some time, hopefully before the next clock cycle. It must be noted that the get_memory in the real world cannot be modeled as an asynchronous circuit, as it usually takes several cycle to get some value out of memory.

You can see that in the FETCH0 case we use the PC both as an input and output, but this is perfectly safe as all the computation will be based on the values at the beginning of the cycle, and the new values will be gathered at the end of it. How to implement the case in hardware? Well it’s quite easy because we can exploit the for free (modulo power consumption) parallelism of electronics to compute all the possible outcome from each instruction and then use multiplexer to get the desired one. Of course to avoid races in the inputs we also need multiplexer on the input side of common circuitry, such as the alu.

So... this is really the basic simple way to build a computational machine. Much new interesting stuff has been added to this now, and I’ll spend some words on that in the next essays. The topics that I would like to cover are pipelined, superscalar, vectorial and multicore CPUs. Maybe I’ll also take a brief look at the new trend (or hype): heterogenous CPUs, such as IBMs Cell. Comment and suggestion are, as always, really welcome.

assembly, Cell, Corsini, CPU architectures, CPU design, Verilog

Talking about CPU architectures: The beginning

Posted by Alessandro Pignotti in Bar discussions on October 20, 2009

Some days ago I was talking about multicore systems and modern CPU technologies with a younger engineering student at Scuola Superiore Sant’Anna. I was quite surprised by how much misconceptions and hype surrounds this topic, even for people that actually study computer engineering. Especially considering that the courses of the University of Pisa are quite good when it comes to low level hardware stuff (computer engineering was born by branching electronic engineering, and the legacy is still quite evident).

So I’ll try to write some articles about CPU architectures, from the basic to advanced topics... well... as advanced as my current knowledge goes, still better than nothing.

Ok, for this essay I’ll basically reference the simple processor design that we study in our awesome Logic Circuits course (thanks Prof. Corsini, even if I’ve not seen a single class of it). The code presented is written is something hopefully similar to Verilog, the hardware description language.

This processor is basically a simple state machine. The internal state is stored in some synchronous register, usually implemented using so called edge-triggered flip-flops. There is a clock source which generates a square wave. The clock is used as an input to registers, which are allowed to get a new value only on the raising edge of the wave. The new value of the registers is usually computed by stateless asynchronous circuits.

A simple circuit to get the address of the next instruction: the new address is computed during the clock cycle by the asynchronous adder and assigned at the next rising edge of the clock

This means that the output depends only on the input, and the clock signal is not used. An example of this kind of circuits could be the adder, or the more complex ALU. Those circuits takes some time to complete their work, the adder, for example, has to propagate the carry information from the least significant bit to the most significant bit. Before the computation is complete the output state undergoes several spurious transitions (add gif) which will be ignored by the following register, as the new value will only be captured on the raising edge of the clock.

This allows us to understand two concepts:

- The faster the clock is, the more new states can be computed, and so the more operations can be done. So this is what the vendors are talking about when they market they several GhZ CPUs.

- Why can’t the clock be infinitely fast? Because to guarantee that all the spurious transitions from asynchronous circuits will be discarted the clock cycle time has to be at least a little longer than the longest settle time of those circuits.

For a long time during the previous years it was easy to pump up the clock speeds, from the 4 Mhz of the first 8088 to the 4 Ghz of the last Pentiums, as the progress in CMOS techlogies allowed to make the circuits smaller and smaller, which also means basically faster and faster. But after a while this had to stop, even if transistors are still getting smaller. The main problem right now is that power consumption and heat production go with the square of the clock frequency, which poses a very heavy upper limit to the reasonable clock speed, if you’re not willing to use liquid refrigeration.

This simple 3 bit adder needs some time to add 011 (3) and 001 (1) because it has to propagate carry information

So, back to the CPU internals. One of the registers has a special purpose: it keeps the state of the processor and it’s usually called STAR (STAtus Register). So the ipotetical pseudo-verilog for this processor would be:

reg [31:0] MBR, STAR, EAX, PC;

always @(posedge clock)

begin

case(STAR)

FETCH0: MBR <= get_memory(PC); STAR <= FETCH1; PC <= PC+4;

FETCH1: STAR <= decode_opcode(MBR);

...

INC0: EAX <= alu(OP_ADD,EAX,1) STAR <= FETCH0;

...

HLT: STAR<=HLT;

end

This would be a very simple, incomplete and totally useless fixed instruction length CPU, which can only increment the accumulator and halt itself. Let’s explain this code a bit. MBR, STAR, EAX and PC are defined as registers, in this case they are 32 bits wide. The STAR register could be larger or smaller depending on the number of possible internal state. The MBR is the Master Buffer Register and it’s used as a temporary storage for computations which should not be seen by the user, while EAX and PC are the accumulator and the program counter which we’re all used to. The \emph{always} syntax means that the actions has to be taken on the raising edge of the clock. Registers which are assigned in this block are usually implemented using an array of edge triggered flip-flops. The <= symbols means non blocking assignment. All those assignments are done in parallel and they will only be effective at the following cycle. The FETCH,INC and HLT are just symbolic version for some numerical constants, which represent the state. The get_memory, decode_opcode and alu symbols are all asynchronous circuits, they will take their inputs and generate the output after some time, hopefully before the next clock cycle. It must be noted that the get_memory in the real world cannot be modeled as an asynchronous circuit, as it usually takes several cycle to get some value out of memory.

You can see that in the FETCH0 case we use the PC both as an input and output, but this is perfectly safe as all the computation will be based on the values at the beginning of the cycle, and the new values will be gathered at the end of it. How to implement the case in hardware? Well it’s quite easy because we can exploit the for free (modulo power consumption) parallelism of electronics to compute all the possible outcome from each instruction and then use multiplexer to get the desired one. Of course to avoid races in the inputs we also need multiplexer on the input side of common circuitry, such as the alu.

So... this is really the basic simple way to build a computational machine. Much new interesting stuff has been added to this now, and I’ll spend some words on that in the next essays. The topics that I would like to cover are pipelined, superscalar, vectorial and multicore CPUs. Maybe I’ll also take a brief look at the new trend (or hype): heterogenous CPUs, such as IBMs Cell. Comment and suggestion are, as always, really welcome.

assembly, Cell, Corsini, CPU architectures, CPU design, Verilog

-

You are currently browsing the archives for October, 2009

Blogroll

- 0x1BADFEED

- 0x1BADFEED

- Antonio Gulli’s coding playground

- Antonio Gulli’s coding playground

- Blog degli Allievi della Scuola Sant'Anna

- Blog degli Allievi della Scuola Sant'Anna

- Forum degli Allievi

- Forum degli Allievi

- Giuseppe Lipari's Scacciamennule

- Giuseppe Lipari's Scacciamennule

- Sant’Anna School of Advanced Studies

- Sant’Anna School of Advanced Studies

Archives

- April 2014

- March 2014

- January 2014

- December 2013

- November 2013

- October 2013

- July 2013

- May 2013

- April 2013

- March 2013

- January 2013

- October 2012

- June 2012

- May 2012

- April 2012

- December 2011

- July 2011

- May 2011

- April 2011

- March 2011

- January 2011

- December 2010

- November 2010

- October 2010

- September 2010

- August 2010

- July 2010

- June 2010

- May 2010

- March 2010

- February 2010

- January 2010

- October 2009

- September 2009

- June 2009

- May 2009

- April 2009

- March 2009

- February 2009